AI-Generated Podcasts with NotebookLM

Maximum Effort, Minimum Reward now has a podcast! I mean, I didn't do any work, but Google did! Hear AI make a podcast explaining an article about AI making a podcast! Are you confused? Me too!

NotebookLM and “Deep Dive”

There’s something so validating about hearing an AI-generated NPR podcast host cover articles you wrote and say “woooOOOOOoooow.” It really feels like you’ve made it.

I’ve been playing with NotebookLM recently, which is Google’s new AI note-taking tool. Its basic functionality lets you upload “sources” (text files, PDFs, etc.), aggregates that information, and lets you ask a large language model (LLM) questions about those sources. Claude, an LLM from Anthropic, has a similar feature called “Projects” that I absolutely love. But one thing that makes NotebookLM really stand out is the “Audio Overview/Deep Dive” feature.

The Deep Dive/Audio Overview feature essentially generates a podcast episode about your sources, and it absolutely nails the NPR podcast style. It is so similar that it’s eerie: there are two hosts, one male and one female, with classic “American media” accents, who have a back-and-forth conversation about your topic at the level of a general audience (complete with bad jokes, correctly-timed laughter, and ham-fisted metaphors). The voices are tuned to mimic the exact style of vocal modulation that will be instantly recognizable to anyone who listens to e.g. Planet Money. The AI hosts have all the vocal quirks of podcasting, including overuse of descriptors like “mindblowing” and “wild,” saying “get this” before introducing a new factoid, and “you’ve got it, you’re catching on” afterwards.

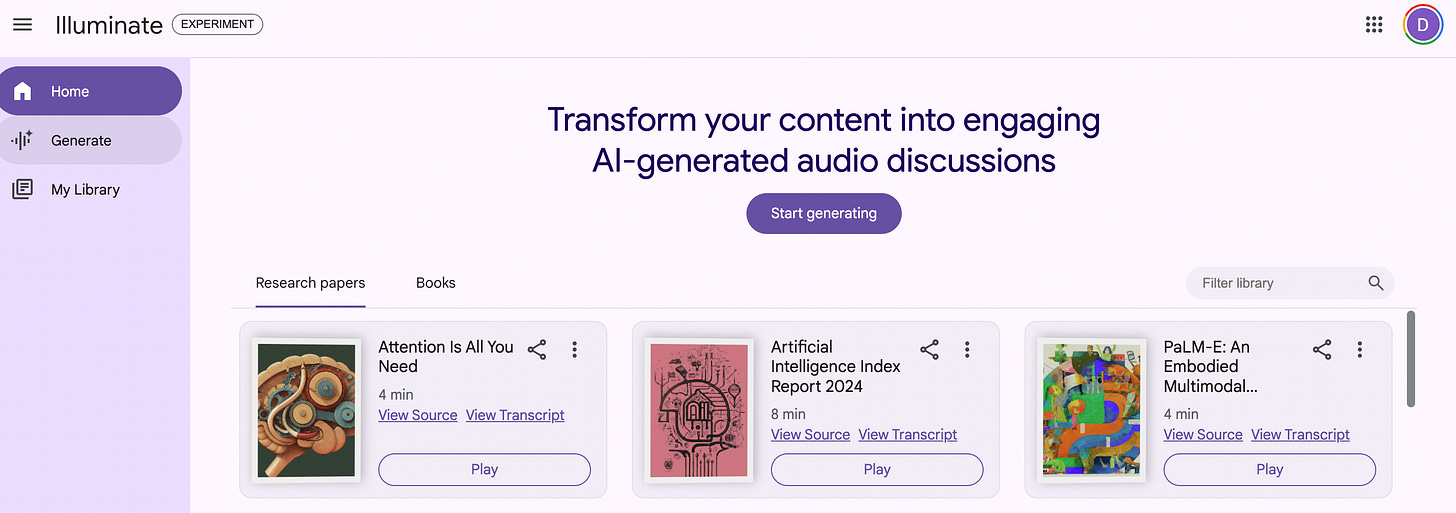

In fact, there are two new Google tools that generate audio overviews; Google Illuminate is the other one. It is slightly different in focus and features: it only allows you to input scientific publications from arXiv and a few other websites, and it lets you tailor the audio overview to general audiences, experts, beginners, etc. It also allows you to set the tone, and “semi-professional” seems to be the default.

Andrej Karpathy on Twitter showcases this brave new podcasting world by generating, in less than two hours, a series of ten podcasts exploring interesting historical mysteries, like the Baghdad Battery and the Roanoke Colony disappearance. I really liked them.

The audio quality is excellent, and the content (again plagiarizing Karpathy) is mediocre-to-good with “flashes of greatness.” Like most podcasts aimed at a general audience, it does a pretty decent job getting the main idea across, and absolutely refuses to end an episode without making a broad generalization with “profound” implications for the rest of life’s mysteries. Usually “life is complicated, you know? *Cue sometimes-slightly-offputting-AI-laugh*.” In general, I wish they went into a little more technical detail, with a few fewer metaphors.

I make fun of it, but I absolutely love this feature, and I feel as though I am in the absolute center of its target demographic. After exploring this a bit, I wanted to learn more about direct audio generation, and so I listened to the AI generated summary of a paper on AudioLM, and you know what? I actually get the general idea, terrible metaphors notwithstanding. This tool has a strong tendency to venture into "string theory is like a taco" territory, but manages to get the point across nonetheless.

I foresee a massive boom in podcast quantity.

Now of course a tool like this is just too tempting to try out on your own writing, and I caved to that temptation the second I thought of it. It is so ego-validating to hear an AI call your work “mindblowing,” regardless of how valid that assessment is. It is slightly less ego-validating when the AI hosts lose the plot a little bit and pontificate about how it’s “so crazy that Assyrian spam texts can say so much about our relationship to authority in the modern world, you know?” The training set is clearly poisoned by NPR.

So without further ado, I present to you, Maximum Effort, Minimum Reward’s new podcast! And for extra bonus meta points, I actually had it generate a podcast episode of this very blog post. That one is probably the most interesting (scroll down to the bottom of this post), as I may have hit some sort of strange recursive failure mode. Either that, or “AIs talking about themselves getting stuck in loops” is a common theme of the training data.

Minimum Effort, Audio Reward

A series of AI-generated podcast episodes on the works of Dylan Black.

This one is possibly the best example showcasing the feature. It’s got humor, it explains the topic well. Even the overgeneralization at the end is fairly relevant. Great podcast episode, 10/10, no notes.

This one has a surprise third guest! Right at the beginning, a man responds to the normal male host, instead of the female host! But it’s generally a really good and engaging summary of the blog post. The back-and-forth is done really, really well, and the jokes land well, though this article relies a bit more heavily on syllable stress than most, and the AI has trouble with stress on “tethera, methera,” etc.

This one is generally very good, because the hosts read some of the text verbatim and nail the comedy, but it leans way too hard into “fascinating” generalizations about modern life and moral pronouncements. It also describes one of the spammers as “smitten” with Ashurnasirpal, when they are clearly suspicious, not smitten. The quality drops precipitously after six minutes or so. Apparently this technique forces us to “confront our deeper relationship with sority [sic].” I think they meant authority.

The host “was watching The Devil Wears Prada recently.” Uh huh, sure you were. My favorite quote, talking about my article: “It’s more interesting than it sounds, I promise.” Ouch. It also makes a couple, ah, questionable speculations about how the sky would look if our eyes were different, and somehow turns an article about objective first-principles derivation of wavelength spectra into a metaphor about subjective points of view in The Devil Wears Prada. That’s so LLM.

This one had some of the best/worst metaphors. “It’s like trying to nail jelly to the wall! It’s just like, they’re so slippery!” It also has some pretty obvious AI audio quirks.

NotebookLM on NotebookLM

And finally, the ultimate test. What do the podcast hosts of Deep Dive think about their own audio? Can they recognize themselves? Can you, hosts? I’m talking to you directly.

Nope. In fact, it seems to think that the male host is me. It actually says “maybe one day we’ll have an AI guest host on this very show!” (About 10:00). The AI entirely makes up the content of the Ancient Egyptian and Emoji Chain Letters post, invents a fictitious History of Coffee post, and praises it extensively.

And at the two-thirds point, about ten minutes, it “ends” the episode, then continues from a middle point, restates the long digression about creativity, and then goes on to talk about the AI-generated episode where the AI gets stuck in a loop. It thinks the loop is a digression on creativity, which I suppose is true in a meta-sense. The English language might need more pronouns for AI talking about its own AI-generated content that it hasn’t seen yet. It’s talking about itself getting stuck in a loop, while stuck in a loop.

Compare ~9:00 and ~12:08 to see the problem, or back up about ten seconds to hear the AI’s opinion of its own loop. In the words of the AI:

9:00 “So did it pass that Turing Test on itself? Not really, it was more like it hit a wall with its own programming. It knew the format and the style were similar, but it couldn’t grasp that it was the one creating them. Like it was searching for a ghost in the machine, not realizing it was the ghost the whole time.”

That is in fact exactly what happened, AI. Maybe this is a weird meta-way of passing the Turing Test, plus some NPR metaphors, or maybe this is exactly what NPR podcast hosts in the training set think AI should say about itself.

Aside from this loop issue, the episode is unusually long, unusually boring, unusually incorrect in its facts, and unusually off-topic. I wonder if this is some actual recursive generation failure mode, or whether this article simply doesn’t have the same quantity of text content to generate with.
