Scaling Problems in Social Control

In which I discuss Reddit engagement statistics, angry moderators, Dunbar's number, and dive headfirst into pseudoscience.

Every form of social control has an inherent scaling problem. Take content moderation, which has been in the news a lot lately. The more people you have to control, the more draconian and less nuanced you must be.

Why am I writing about this?

Over the course of four days, I posted my article on the linguistics of Stargate to three different subreddits (sub-communities existing on reddit.com) that I thought might enjoy it. These were:

/r/AncientEgyptian (one of my favorite subreddits, with a lovely community)

/r/linguistics (another favorite)

/r/moviedetails (we’ll get to that)

/r/AncientEgyptian and /r/linguistics seemed to really like my article. I got lots of positive engagement and some fun and insightful comments. Then, I posted my article on the /r/moviedetails subreddit. A few minutes later, I was permanently banned from the subreddit, and got this message from the moderators.

Now, I do understand where this mod is coming from. Imagine a (popular) forum with zero content moderation whatsoever — it would likely be overrun by bots. Some form of content moderation is necessary to prevent total madness.

But my article is not the type of spam these rules are meant to exclude. Algorithmic application of single-strike rules totally absolves the moderator-bureaucrat from any responsibility for nuanced thought and judgment. It makes us into machines. I realize I’m extrapolating a lot from a movie details subreddit, but damn it, I’m annoyed, and reddit moderation is really annoying.

Okay, pontificating over, let’s look at some data.

Popular Subreddits Have Worse Engagement (For Me)

From personal experience posting my blog articles (and other, miscellaneous topics) on reddit over the course of the last year, I’ve noticed a distinct difference in positive content engagement and aggressive moderation between large and small communities.

Large communities have draconian, one-strike moderation, are much less welcoming, and give poorer responses per community member. Small communities have welcoming, pleasant atmospheres, better engagement per community member, and very little moderation.

Here are the statistics on my reddit usage over the past two years:

There are two kinds of actions one can take in response to a post on reddit: you can upvote/downvote the post, or you can comment on it. Here’s what that looked like for me, vs. subreddit size:

Let’s combine upvotes, downvotes, and comments into a single figure of merit I call “engagement.” It makes sense to me to weight a comment higher than an upvote, since a comment represents more effort spent engaging with the post. Further, I’m going to stick with reddit’s convention and subtract downvotes from upvotes.

After some experimenting with multiplicative vs. additive figures of merit, and finding basically no difference in the conclusion, I chose:

Engagement = Upvotes - Downvotes + 10*Comments.

Plotting the per-post, per-subscriber engagement against the number of subreddit subscribers roughly measures the positive response to a post relative to the community size. For example, the Ancient Egyptian subreddit community has 4,800 subscribers at the time of this post. So, if a post of mine has 5 “karma” (defined as upvotes - downvotes) and 4 comments, the engagement for this post on /r/AncientEgyptian would be 5 + 10*4 = 45, or 45 / 4,800 ≈ 0.0094 per subscriber.
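For anyone who wants to replay the arithmetic, here is a minimal Python sketch of the figure of merit. The function names and the `comment_weight` parameter are my own labels, not anything reddit provides, and dividing by the subscriber count is my reading of "per-subscriber." The 5 karma in the example is entered as 5 upvotes and 0 downvotes, which is an assumption; any split with the same net karma gives the same score.

```python
def engagement(upvotes: int, downvotes: int, comments: int,
               comment_weight: int = 10) -> int:
    """Engagement = Upvotes - Downvotes + 10*Comments (weight is adjustable)."""
    return upvotes - downvotes + comment_weight * comments


def engagement_per_subscriber(score: float, subscribers: int) -> float:
    """Normalize a post's engagement score by the size of the subreddit."""
    if subscribers <= 0:
        raise ValueError("subscribers must be a positive number")
    return score / subscribers


# Worked example from the text: 5 karma and 4 comments on /r/AncientEgyptian.
# Assumption: the 5 karma is 5 upvotes and 0 downvotes.
score = engagement(upvotes=5, downvotes=0, comments=4)
print(score)  # 45

# 4,800 subscribers at the time of the post.
print(round(engagement_per_subscriber(score, 4800), 4))  # 0.0094
```

Changing `comment_weight` is an easy way to check how sensitive the trend is to the (admittedly arbitrary) choice of 10.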

Each dot in the following plot represents a subreddit community, plotted vs. # of subscribers to that community.

We see a strong negative trend. The larger the community, the worse engagement I got, and the trend looks exponential-ish.

Let’s also zoom in on the “fraction of posts removed” plot from earlier:

I notice a pretty sharp increase somewhere between 10^5 and 10^6 subscribers, with an inflection point at ~300,000. Posting on larger subreddits gives you less engagement per subscriber, even though far more people should see your post, and you also run a much larger risk of simply getting your post removed. What’s going on here?

Let me play the null-hypothesis advocate. In the majority of “large” subreddits, I only made one post, which is difficult to do statistics on. Further, I tended to post my articles first in smaller subreddits that I actually liked, and only then in larger subreddits. The /r/moviedetails mod I communicated with (above) explicitly says that they check post history to remove “spammers,” so, it’s entirely possible that this graph is merely a graph of when I trigger an automated spam filter. In other words, there are a ton of potential confounding factors.

But despite this, I think these graphs are suggestive of a real effect, and a strong one too — large communities are much worse at positive engagement with ideas, in my case, very silly ideas.

Some Regimentation Required

Some regimentation was necessary to prevent bedlam.

-Frank Bunker Gilbreth Jr. and Ernestine Gilbreth Carey, Cheaper by the Dozen

The quote above, from Cheaper by the Dozen (the book, not the movie), is from a woman with eleven siblings, explaining that their father had to implement some aspects of assembly-line parenting to get everyone out the door in the morning.

I think that we’ve all experienced something like this. Figuring out where to eat with your three friends is easy: it’s low-stakes, and there are only a few people involved. Everyone can pretty easily give their opinion, and reach a consensus before you all get too hungry. But try to apply the same freeform discussion method to feeding a football stadium with 100,000 people. It won’t work. You can’t even hear whoever is speaking at the time, and we’d all starve before everyone had their say.

Let’s examine this problem in general, taking as an axiom that social control mechanisms, including representative democracy, reddit moderation, etc., must get harsher and less nuanced as group size increases. How large can a decision-making community become before social control mechanisms become draconian by necessity?

In Which I Speculate Wildly from No Evidence

Size and Democracy, by Robert A. Dahl and Edward R. Tufte, claims that the limit of direct democracy depends upon the communication channel capacity of the community involved. Let us consider a community that interacts by direct person-to-person communication, by speech or by text, that has no explicit rules governing conduct, and relies basically on mutual trust and goodwill.

How many people can be in this community with no real “rules” explicitly laid out? Consider Robin Dunbar, a British anthropologist most famous for proposing Dunbar’s number, which Wikipedia defines as:

a suggested cognitive limit to the number of people with whom one can maintain stable social relationships—relationships in which an individual knows who each person is and how each person relates to every other person.

Basically, Dunbar used a regression model on primate group size to predict that each human can comfortably maintain ~150 stable social relationships, and that this should predict the average group size of a human group.

Dunbar's surveys of village and tribe sizes also appeared to approximate this predicted value, including 150 as the estimated size of a Neolithic farming village; 150 as the splitting point of Hutterite settlements; 200 as the upper bound on the number of academics in a discipline's sub-specialisation; 150 as the basic unit size of professional armies in Roman antiquity and in modern times since the 16th century, as well as notions of appropriate company size.

Let’s take this number (150) as a proxy for the maximum size of a trusted group of any given person.

How far does this trust extend? Let’s imagine that I mostly trust my 150 friends to discuss and debate in good faith. I also tend to trust my “friends-of-friends,” because they are vouched for by a friend. But I don’t really trust my “friends-of-friends-of-friends,” as I have no trusted friend who can vouch for them. So, I claim that the “degree of separation” to which trust extends is somewhere between 2 and 3. Let’s call it 2.5.

I conceptualize this as a graph.

Each node will be a group member, and each edge will represent a weak trust-based relationship, i.e. you kinda know this person.

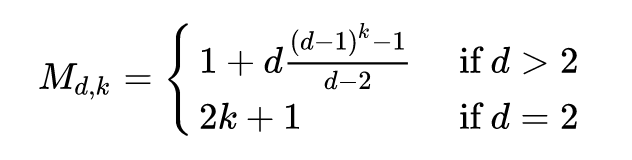

The maximum community size for a person-to-person trust-based community, then, is the largest possible graph such that any two nodes are separated by no more than 2.5 edges, and each node is connected to 150 other nodes. This problem is well-studied in graph theory, and is called the degree-diameter problem. The maximum size of such a graph M is given by the Moore bound:

M = 1 + d * ((d - 1)^k - 1) / (d - 2)

d is the number of edges-per-node (150 in this case), and k is the maximum distance between any two nodes (the graph's diameter). Plugging in d = 150 (Dunbar’s number) and k = 2.5 (maximum number of edges between any two nodes), I find M = 274,660. Incidentally, the Moore bound is very close to the naive bound d^k, which gives me 275,568.
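The two numbers above are easy to check. Below is a small Python sketch that evaluates the closed form of the Moore bound, 1 + d * ((d - 1)^k - 1) / (d - 2), alongside the naive d^k. The function names are mine. Strictly speaking the Moore bound is defined for whole-number k, where it just counts the nodes reachable layer by layer from a starting node; plugging k = 2.5 into the closed form is an interpolation, in keeping with the speculative spirit of this section.

```python
def moore_bound(d: float, k: float) -> float:
    """Closed-form Moore bound for max degree d and diameter k (needs d > 2)."""
    if d <= 2:
        raise ValueError("this closed form divides by (d - 2), so it needs d > 2")
    return 1 + d * ((d - 1) ** k - 1) / (d - 2)


def moore_bound_layers(d: int, k: int) -> int:
    """Same bound for whole-number k, counted layer by layer.

    One node at the center, d nodes one step away, then each new node
    brings at most (d - 1) further neighbors at every following step.
    """
    return 1 + sum(d * (d - 1) ** i for i in range(k))


def naive_bound(d: float, k: float) -> float:
    """The rougher estimate d^k."""
    return d ** k


DUNBAR = 150        # edges per node
TRUST_RADIUS = 2.5  # degrees of separation that trust extends to

print(round(moore_bound(DUNBAR, TRUST_RADIUS)))  # 274660
print(round(naive_bound(DUNBAR, TRUST_RADIUS)))  # 275568

# Sanity check: for whole-number k the two Moore formulas agree.
print(moore_bound_layers(3, 2), moore_bound(3, 2))  # 10 10.0
```

The d = 3, k = 2 case is a handy check because a graph of that size really exists (the Petersen graph, with 10 nodes). For most other values the bound is not attainable, so these numbers are upper limits rather than sizes of actual graphs.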

So, using this highly speculative model of human relations, I find that the maximum group size where decision-making occurs via direct person-to-person communication, where each person can mostly trust that the others are discussing in good faith by virtue of having some sort of social relationship, is roughly 300,000.

Let’s see those reddit statistics for my posts again.

The inflection points on these graphs, where my posts start getting removed for bullshit reasons and my positive engagement score starts dropping rapidly, are in fact right around 300,000!

Now before everyone gets annoyed with me for my total lack of rigor and totally unfounded speculation, I want to state for the record that this is overwhelmingly likely to be a total coincidence, and that my N = 10 experiment on myself proves absolutely nothing.

Buuuut, I think that something like this general rule should apply. I think that consensus building without explicit, harsh rules to keep everyone in line should have some sort of practical size limit. That limit should be based on human biology and the information capacity of the communication channel, and it should be a relatively low number. The limit must be higher than three people (from personal experience alone), and we also know that it’s functionally impossible to listen to a billion people each give their individual, nuanced opinions, so the theoretical limit should be somewhere between these bounds.

In Conclusion, Scaling Problems

Okay, so I want to be crystal clear. I do not actually think that my personal experience with reddit mods is sufficient evidence for sweeping conclusions about maximal human group size. I do, however, think that the general trend where large groups are less fun than small groups is valid, and I also think it’s pretty obvious that small communities can exist harmoniously without the harsh social control measures that large communities seem to develop.

Scaling problems like this are universal. Political systems that are valid for hunter-gatherer tribes of 150 people may not be valid for a billion people. Arbitrarily scalable solutions for social or engineering problems basically don’t exist; even physical laws do not appear to be arbitrarily scalable (theories of quantum gravity are hard). Why should human group size be any different? And so, with all the caveats I’ve mentioned, I’m going to propose a theoretical limit on the scale of a “nice” community of people to talk to. It’s 300,000 people. Why not?

Arbitrary decisions from idiot mods have been around as long as there have been online communities. This same dynamic played out in 8-member IRC chat rooms back in 1995.

It comes down to a question about the purpose of moderation and the moderators. Do they want to nurture and encourage a community, or are they there to act as lobotomized rule-followers with bad attitudes and no cultural norms to weed out the arbitrary bad decisions?

Reddit, like all the big social companies, has opted for the latter. It's not a problem of moderation in itself (as you say this is necessary), but having dull and capricious people with their hands on the levers.

This is a really interesting idea! The exponent of 2.5 is a little non-rigorous but the idea, I think, is fundamentally very good.

As a lower bound, you can probably say you can trust your friends-of-friends to have good faith discussions and as an upper bound, you can probably expect that friends-of-friends-of-friends is when you'll start to need to handle scaling issues, which gives a range of about 20k to 3M.

It'd be interesting to see if there could be some way of testing what this number is empirically.