Voltorb Flip: Overanalyzing a Minigame in Pokemon HeartGold and SoulSilver -- Part 3

In which I learn that Tesseract is difficult to use, and build the least robust OCR engine ever.

This post is Part 3 of a four-part series on Voltorb Flip, a minigame found in Pokemon HeartGold and SoulSilver. Here are parts 2.5, 2, and 1.

Optical Character Recognition

Let’s pretend that, for whatever reason, one has decided to play many, many games of Voltorb Flip, and wants to win. Maybe you really want to buy a Shinx.

Let’s also say that you’ve gotten really, really tired of typing in the number of points and Voltorbs into your algorithm. What can you do? I suppose you could take apart the game code and directly read what’s being broadcast to the screen, but it’s probably simpler to build an Optical Character Recognition engine. An OCR engine will read the digits from the screen output just like a human would. This should be easy, right?

Tesseract is confusing

That’s what I thought, anyway. I googled “OCR Engine,” and the first non-ad result was Tesseract, which was originally developed in the late 80s as an OCR Engine. Hopefully it works out-of-the-box.

It’s a pretty simply command line tool. Once you install it, you can give commands like this to Terminal:

Screenshot.png out --psm 3This command loads “Screenshot.png,” uses “page segmentation mode 3” to partition the image into characters, and prints the results to “out.txt” in the same directory. Let’s try it out on this image:

The out.txt result gives:

OU CANIM

3531

Earned coins:

Lewe| dqThat’s actually not too bad! Seems to mostly work, needs a little refinement. There’s also a “digits” option that forces the output to consist of numbers only. If we use that, Tesseract outputs only “3531.” That’s a little concerning, as it totally skips the very obvious zeros and the nine. Let’s try it out with this image, which is only digits (and a Voltorb):

It throws an “Empty page” error. What if we try manually thresholding the image in photopea?

Same “Empty page” error. Uh oh.

To summarize the following tedious process, I installed a python wrapper for Tesseract, PyTesseract, and spent a long time attempting to threshold and blur the images to the point where PyTesseract would correctly identify all nine digits. It didn’t work — in order to use Tesseract for this application, I would have to re-train the engine on the Voltorb Flip font.

Caveman OCR

Instead, let’s try the dumbest thing imaginable — let’s manually create a library of images (a set of kernels) with each possible digit, partition the Voltorb Flip board into sub-images, and compare each sub-image to our library of digits. This method almost has to work.

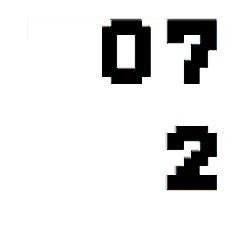

Here’s my appropriately thresholded and binarized digit library:

To use it, I read in a Voltorb Flip board image, threshold and binarize it:

Then I partition the image into the 20 separate sub-images, one for each number I want to recognize. This is the “partition” I’m testing against my digit library:

Finally, I cross-correlate each partition with each digit from my digit library, and choose the digit with the best overlap. For the previous board, this program outputs the following statistics:

It actually works! There’s just two problems:

It’s incredibly slow, taking roughly three minutes to recognize twenty digits.

8 and 3 have perfect overlap — all filled pixels in the three digit are also filled in the eight digit. Consequently, my algorithm mistakes eights for threes.

The solution to the first problem was simple — I downsampled all images and kernels by 10 before cross-correlating, obtaining a 100-fold improvement in speed. Done!

The solution to this second problem was more interesting. For each image I want to read, say “7,” my OCR engine compares against all digits in the library, 0 to 9, and returns a vector of “overlap scores,” which correspond to the similarity between the image and each digit in the library.

For example, I did a cross correlation of “7” against the numbers (2, 3, 4, 5, 6, 7, 8, 9, 10) from my digit library. It returns this vector:

[0.767 0.839 0.732 0.839 0.843 0.993 0.843 0.843 0.634]The highest score, 0.993, corresponds to the 7 digit, thus my algorithm returns 7. The problem occurs when this high score is not unique — for “8,” my algorithm gives the same similarity score to both 8 and 3.

Fortunately, the vector of output scores is unique.

So, I constructed a simple lookup table for score vectors, where I pre-define the score vector expected for each digit. There are only fifteen possible cases, so this only took a few minutes, and it has 100% accuracy so far. This solution isn’t robust to any variation in the Voltorb Flip board image, because any variation at all will produce a slightly different score vector and cause this to fail. But this method doesn’t need to be robust, because each instance of the same digit is always pixel-by-pixel identical.

Interestingly, you don’t need to compare your partition against the entire library of digits — correlating your partition against some subset of the digit library is sufficient to arrive at a unique score vector using this method. Convolving images against a standard kernel library is an old technique in OCR, and in hindsight, probably the one I should have chosen in the first place. That would also be significantly more robust, if properly implemented in, for example, a neural net. But who cares? I have a fast, 100% accurate OCR method for reading in the game statistics, and an algorithm for playing with 71% accuracy! Let’s play some Voltorb Flip!